A word2vec model is a simple neural network with a single hidden layer. Its task is to predict the nearby words for each word in a sentence.
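
"Predicting the nearby words for each word" can be made concrete by listing the (input word, context word) training pairs that a skip-gram model sees. The sketch below assumes a fixed, symmetric context window. The helper name `skipgram_pairs` and its `window` parameter are hypothetical illustrations, not part of any library. Real word2vec implementations add refinements, such as subsampling of frequent words, that this sketch omits.

```python
from typing import List, Tuple


def skipgram_pairs(tokens: List[str], window: int = 2) -> List[Tuple[str, str]]:
    """Return (input_word, context_word) pairs for every word in `tokens`.

    Each word is paired with up to `window` neighbours on each side;
    these neighbours are the words the skip-gram model learns to predict.
    """
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)                  # leftmost context position
        hi = min(len(tokens), i + window + 1)    # one past the rightmost position
        for j in range(lo, hi):
            if j != i:                           # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs


if __name__ == "__main__":
    sentence = "the quick brown fox jumps".split()
    for pair in skipgram_pairs(sentence, window=1):
        print(pair)
```

With `window=1`, each interior word yields two pairs and each edge word yields one, so this five-word sentence produces eight pairs.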

Applying word2vec Model on Non-Textual Data

The fundamental idea:

The key is the sequential nature of text. Every sentence or phrase is a sequence of words, and without that order we would have a hard time understanding it. Just try to interpret the sentence below:

“these most been languages deciphered written of have already”

The words are out of order, so the sentence is hard to grasp. (Unscrambled, it reads "most of these written languages have already been deciphered".) That is why word order is so important in any natural language. This very property got me thinking about data other than text that is sequential as well.

Take, for example, the purchases that consumers make on e-commerce websites: most of the time, there is a pattern in their buying behavior.
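
To reuse word2vec here, each customer's purchase history can be treated as a "sentence" and each product as a "word". The sketch below assumes a hypothetical purchase log of `(customer_id, timestamp, product_id)` records. That layout and the `purchase_sequences` helper are illustrative assumptions, not taken from the article.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical purchase record: (customer_id, timestamp, product_id).
Purchase = Tuple[str, int, str]


def purchase_sequences(log: List[Purchase]) -> Dict[str, List[str]]:
    """Group purchases by customer and order each group by timestamp.

    Each resulting list of product IDs plays the role of a sentence,
    and each product ID plays the role of a word.
    """
    by_customer: Dict[str, List[Tuple[int, str]]] = defaultdict(list)
    for customer, ts, product in log:
        by_customer[customer].append((ts, product))
    # Sorting the (timestamp, product) tuples puts purchases in time order.
    return {
        customer: [product for _, product in sorted(items)]
        for customer, items in by_customer.items()
    }


if __name__ == "__main__":
    log = [
        ("c1", 3, "phone_case"),
        ("c2", 1, "laptop"),
        ("c1", 1, "phone"),
        ("c1", 2, "charger"),
        ("c2", 2, "laptop_bag"),
    ]
    print(purchase_sequences(log))
    # prints {'c1': ['phone', 'charger', 'phone_case'], 'c2': ['laptop', 'laptop_bag']}
```

These per-customer sequences can then be fed to a skip-gram model in place of sentences of text.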

If we can represent each of these products as a vector, we can easily find similar products. So if a user is viewing a product online, we can recommend similar products to them using the vector similarity score between products.
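
The article does not say which "vector similarity score" it uses. Cosine similarity is a common choice for word2vec vectors and is assumed in the sketch below. The product vectors are made up for illustration, since in practice they would come from a trained model. The helper names `cosine` and `most_similar` are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity: dot product divided by the product of the norms."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0  # treat zero vectors as dissimilar


def most_similar(query: str, vectors: Dict[str, List[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Return the k products whose vectors have the highest cosine similarity to `query`."""
    q = vectors[query]
    scores = [(p, cosine(q, v)) for p, v in vectors.items() if p != query]
    scores.sort(key=lambda item: item[1], reverse=True)
    return scores[:k]


if __name__ == "__main__":
    # Made-up 3-dimensional product vectors, for illustration only.
    vectors = {
        "phone": [0.9, 0.1, 0.0],
        "charger": [0.8, 0.2, 0.1],
        "phone_case": [0.7, 0.3, 0.0],
        "sofa": [0.0, 0.1, 0.9],
    }
    print(most_similar("phone", vectors, k=2))
```

Cosine similarity compares only the direction of two vectors and ignores their length, which is why it is often preferred over raw dot products for comparing embeddings.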

The word2vec skip-gram model is a shallow neural network with a single hidden layer. It takes a word as input and tries to predict the surrounding context words as output. Let's take the following sentence as an example:

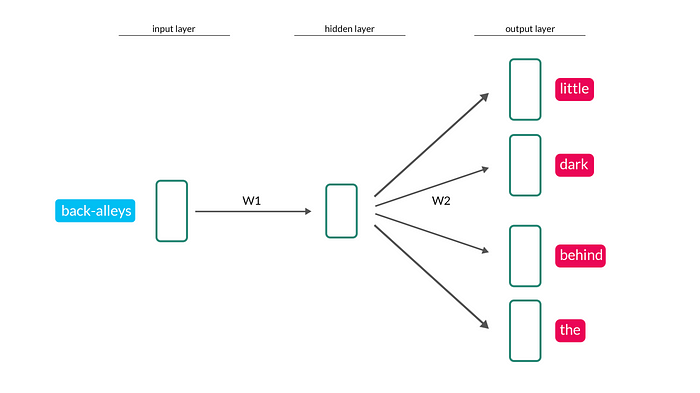

In the sentence above, the word ‘back-alleys’ is our current input word, and the words ‘little’, ‘dark’, ‘behind’ and ‘the’ are the output words that we would want to predict given that input word. Our neural network looks something like this:

If two different words mostly appear in similar contexts, we expect the neural network to produce very similar predictions when given either word as input. The values of the weight matrices control the predictions at the output. So if two words appear in similar contexts, we expect their weight values to be largely similar, that is, their rows in the input-to-hidden weight matrix, which serve as the word vectors.
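
A toy forward pass shows how the weight values determine the predictions. The sketch below assumes a full softmax output layer and made-up weights. Real word2vec training usually replaces the full softmax with negative sampling or hierarchical softmax for speed. The names `predict_context` and `softmax` are hypothetical. Multiplying a one-hot input by the input weight matrix just selects that word's row, so two words with identical rows get identical predictions. The sketch shows only this direction of the argument; the claim that training on shared contexts pushes rows together is not demonstrated here.

```python
import math
from typing import List


def softmax(scores: List[float]) -> List[float]:
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_context(word_id: int, w_in: List[List[float]], w_out: List[List[float]]) -> List[float]:
    """Skip-gram forward pass for one input word.

    A one-hot input times w_in (vocab x dim) selects row `word_id`,
    so the hidden layer is exactly that word's vector. The output
    weights w_out (dim x vocab) then score every vocabulary word
    as a possible context word.
    """
    hidden = w_in[word_id]
    vocab_size = len(w_out[0])
    scores = [
        sum(hidden[k] * w_out[k][j] for k in range(len(hidden)))
        for j in range(vocab_size)
    ]
    return softmax(scores)


if __name__ == "__main__":
    # Made-up weights: 3-word vocabulary, 2-dimensional hidden layer.
    w_in = [
        [0.5, -0.2],   # word 0
        [0.5, -0.2],   # word 1: same row as word 0
        [-0.3, 0.8],   # word 2
    ]
    w_out = [
        [0.1, 0.4, -0.5],
        [0.7, -0.1, 0.2],
    ]
    print(predict_context(0, w_in, w_out) == predict_context(1, w_in, w_out))  # True
```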

Need the algorithm? Mail: avik_das_2017@cba.isb.edu