Objective of a Recommendation System:

A recommendation should be:

- relevant to the user

- not already well known to that user

- hard for the user to discover on their own

- personalized, where possible

- helpful for discovering new, relevant items that the user would not have thought to look for

Recommendation Metrics:

- Prediction / statistical accuracy metrics (MAE, RMSE): The two most popular metrics in this group are MAE (mean absolute error) and RMSE (root mean squared error). Both are normalized special cases of the Minkowski metric (orders 1 and 2, respectively). They measure how numerically close a predicted value is to the actual value. MAE penalizes every error in proportion to its size, while RMSE penalizes larger errors more heavily.

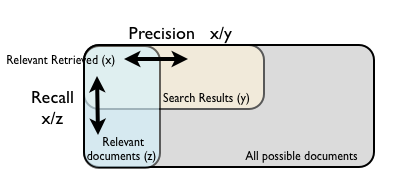

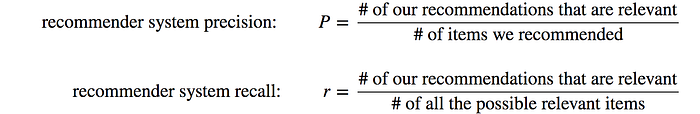
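As a rough illustration of the difference between the two metrics, the sketch below computes both by hand for a few made-up ratings. The helper names `mae` and `rmse` and all the rating values are assumptions for this example, not taken from any dataset or library.

```python
import math

def mae(y_true, y_pred):
    """Mean absolute error: average of |prediction - actual|."""
    return sum(abs(p - t) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root mean squared error: square root of the average squared error."""
    return math.sqrt(sum((p - t) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

# Hypothetical ratings on a 1-5 scale
actual = [4, 3, 5, 2]
even_errors = [3, 4, 4, 3]    # every prediction is off by 1
one_big_error = [4, 3, 5, 6]  # a single prediction is off by 4

print(mae(actual, even_errors), rmse(actual, even_errors))      # 1.0 1.0
print(mae(actual, one_big_error), rmse(actual, one_big_error))  # 1.0 2.0
```

Both prediction lists have the same MAE, but RMSE doubles for the list whose total error is concentrated in one prediction.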

- Decision support accuracy metrics (Precision, Recall, F1 score): These measure how well the system helps users pick out the relevant items from the set of available items. They treat recommendation as a binary decision that separates good (relevant) items from those that are not. Precision is the proportion of recommended items that are relevant, and recall is the proportion of relevant items that appear among the top recommendations.

Precision, Recall, and F1 Score in Detail:

In information retrieval, precision is a measure of result relevancy, while recall is a measure of how many truly relevant results are returned.

A system with high recall but low precision returns many results, but most of its predicted labels are incorrect when compared to the true labels.

A system with high precision but low recall is just the opposite, returning very few results, but most of its predicted labels are correct when compared to the true labels.

An ideal system with high precision and high recall will return many results, with all results labeled correctly.

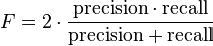

In the recommendation domain, the F1 score combines precision and recall into a single value (their harmonic mean) and indicates the overall utility of the recommendation list.

Sample Code: Precision, Recall, and F1 Score
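For a recommendation list, these quantities are often computed on the top K items. The following is a minimal sketch under that assumption; the function name `precision_recall_f1_at_k` and the item IDs are hypothetical and only serve the illustration.

```python
def precision_recall_f1_at_k(recommended, relevant, k):
    """Precision, recall and F1 for the top-k items of a ranked list.

    recommended: ranked list of item IDs (best first)
    relevant: set of item IDs the user actually found relevant
    """
    top_k = recommended[:k]
    hits = sum(1 for item in top_k if item in relevant)
    precision = hits / len(top_k) if top_k else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    # F1 is the harmonic mean of precision and recall (0 when there are no hits)
    f1 = 2 * precision * recall / (precision + recall) if hits else 0.0
    return precision, recall, f1

# Hypothetical data: 5 recommendations, 4 relevant items, 2 of them recommended
recommended = ["a", "b", "c", "d", "e"]
relevant = {"a", "c", "f", "g"}

p, r, f1 = precision_recall_f1_at_k(recommended, relevant, k=5)
print(p, r, f1)  # precision 2/5, recall 2/4, F1 4/9
```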

```python
import numpy as np
from sklearn import svm, datasets
from sklearn.metrics import f1_score, precision_score, recall_score
from sklearn.model_selection import train_test_split

iris = datasets.load_iris()
X = iris.data
y = iris.target

# Add noisy features
random_state = np.random.RandomState(0)
n_samples, n_features = X.shape
X = np.c_[X, random_state.randn(n_samples, 200 * n_features)]

# Limit to the two first classes, and split into training and test
X_train, X_test, y_train, y_test = train_test_split(
    X[y < 2], y[y < 2], test_size=.5, random_state=random_state)

# Create a simple classifier
classifier = svm.LinearSVC(random_state=random_state)
classifier.fit(X_train, y_train)
y_pred = classifier.predict(X_test)
# y_score = classifier.decision_function(X_test)

# Macro-averaged scores: the unweighted mean of the per-class values
print(f1_score(y_test, y_pred, average="macro"))
print(precision_score(y_test, y_pred, average="macro"))
print(recall_score(y_test, y_pred, average="macro"))
```

The average parameter passed to these scoring functions accepts the following values:

- 'binary': Only report results for the class specified by pos_label. This is applicable only if targets (y_{true,pred}) are binary.

- 'micro': Calculate metrics globally by counting the total true positives, false negatives and false positives.

- 'macro': Calculate metrics for each label, and find their unweighted mean. This does not take label imbalance into account.

- 'weighted': Calculate metrics for each label, and find their average weighted by support (the number of true instances for each label). This alters 'macro' to account for label imbalance; it can result in an F-score that is not between precision and recall.

- 'samples': Calculate metrics for each instance, and find their average (only meaningful for multilabel classification where this differs from accuracy_score).
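To make the difference between the micro, macro and weighted options concrete, here is a hand-rolled sketch for precision on a small single-label, multi-class example. It follows the definitions above rather than reproducing scikit-learn's implementation; the function name `averaged_precision` and the labels are assumptions made for this illustration.

```python
from collections import Counter

def averaged_precision(y_true, y_pred):
    """Per-label precision plus its micro, macro and weighted averages."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp = Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[p] += 1   # correct prediction for label p
        else:
            fp[p] += 1   # label p predicted, but the true label differs
    support = Counter(y_true)  # number of true instances per label

    per_label = {}
    for label in labels:
        predicted = tp[label] + fp[label]
        per_label[label] = tp[label] / predicted if predicted else 0.0

    # micro: pool all true/false positives before dividing
    micro = sum(tp.values()) / (sum(tp.values()) + sum(fp.values()))
    # macro: unweighted mean of the per-label values
    macro = sum(per_label.values()) / len(labels)
    # weighted: mean of the per-label values weighted by support
    weighted = sum(per_label[l] * support[l] for l in labels) / len(y_true)
    return per_label, micro, macro, weighted

# Hypothetical imbalanced labels: class 0 dominates
y_true = [0, 0, 0, 0, 0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 0, 0, 0, 1, 1, 2, 2, 2]

per_label, micro, macro, weighted = averaged_precision(y_true, y_pred)
print(per_label)
print(micro, macro, weighted)
```

In this example the majority class is predicted with perfect precision, so the weighted average comes out higher than the macro average, which treats all three classes equally.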

NDCG (Normalized Discounted Cumulative Gain)

MAP (mean average precision) is a metric for binary feedback only, while NDCG can be used whenever you can assign a relevance score (binary, integer, or real) to a recommended item.

Two assumptions are made in using DCG and its related measures.

- Highly relevant documents are more useful when appearing earlier in a search engine result list (have higher ranks)

- Highly relevant documents are more useful than marginally relevant documents, which are in turn more useful than non-relevant documents.

DCG originates from an earlier, more primitive, measure called Cumulative Gain.

NDCG

Intimidating as the name might be, the idea behind NDCG is pretty simple. A recommender returns some items and we’d like to compute how good the list is. Each item has a relevance score, usually a non-negative number. That’s gain. For items we don’t have user feedback for we usually set the gain to zero.

Now we add up those scores; that’s cumulative gain. We’d prefer to see the most relevant items at the top of the list, therefore before summing the scores we divide each by a growing number (usually a logarithm of the item position) — that’s discounting — and get a DCG.
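Written out, and assuming the common base-2 logarithmic discount (other discounts are possible), the DCG of a list whose item at position i has gain rel_i is:

```latex
\mathrm{DCG@}K = \sum_{i=1}^{K} \frac{rel_i}{\log_2(i + 1)}
```

For example, a list with gains (3, 2, 3) gives 3/log2(2) + 2/log2(3) + 3/log2(4) = 3 + 1.262 + 1.5, or about 5.762.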

DCGs are not directly comparable between users, so we normalize them. The worst possible DCG when using non-negative relevance scores is zero. To get the best possible DCG, we arrange all the items in the test set in the ideal order (most relevant first), take the first K items, and compute the DCG for them. Then we divide the raw DCG by this ideal DCG to get NDCG@K, a number between 0 and 1.
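The normalization just described can be sketched in a few lines of plain Python. This is a minimal illustration that assumes a log2 discount and divides by the ideal DCG; the function names `dcg` and `ndcg` and the relevance scores are made up for the example.

```python
import math

def dcg(relevances, k):
    """DCG@k with a log2 discount: sum of rel_i / log2(i + 1), positions from 1."""
    return sum(rel / math.log2(pos + 2) for pos, rel in enumerate(relevances[:k]))

def ndcg(relevances, all_relevances, k):
    """NDCG@k: DCG of the returned list divided by the DCG of the ideal ordering.

    relevances: gains of the recommended items, in the order they were returned
    all_relevances: gains of every item in the user's test set
    """
    ideal = dcg(sorted(all_relevances, reverse=True), k)
    return dcg(relevances, k) / ideal if ideal > 0 else 0.0

# Hypothetical test set for one user, and two candidate top-3 lists
test_set = [3, 2, 1, 0, 0]
good_list = [3, 2, 1]  # most relevant items first
poor_list = [0, 1, 3]  # most relevant item last

print(ndcg(good_list, test_set, k=3))  # the ideal ordering scores 1.0
print(ndcg(poor_list, test_set, k=3))  # lower, because the best item is ranked last
```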

Code

```python
import numpy as np

# Precomputed log2 discounts for ranks 1..100; change this if using K > 100
denominator_table = np.log2(np.arange(2, 102))


def dcg_at_k(r, k, method=1):
    """Score is discounted cumulative gain (DCG).

    Relevance is positive real values. Binary relevance also works.

    Example from
    http://www.stanford.edu/class/cs276/handouts/EvaluationNew-handout-6-per.pdf

    >>> r = [3, 2, 3, 0, 0, 1, 2, 2, 3, 0]
    >>> dcg_at_k(r, 1, method=0)
    3.0
    >>> dcg_at_k(r, 1, method=1)
    3.0
    >>> dcg_at_k(r, 2, method=0)
    5.0
    >>> round(dcg_at_k(r, 2, method=1), 4)
    4.2619
    >>> round(dcg_at_k(r, 10, method=0), 4)
    9.6051
    >>> round(dcg_at_k(r, 11, method=0), 4)
    9.6051

    Args:
        r: Relevance scores (list or numpy) in rank order
            (first element is the first item)
        k: Number of results to consider
        method: If 0 then weights are [1.0, 1.0, 0.6309, 0.5, 0.4307, ...]
                If 1 then weights are [1.0, 0.6309, 0.5, 0.4307, ...]
    Returns:
        Discounted cumulative gain
    """
    # np.asfarray was removed in NumPy 2.0, so convert explicitly
    r = np.asarray(r, dtype=float)[:k]
    if r.size:
        if method == 0:
            return float(r[0] + np.sum(r[1:] / np.log2(np.arange(2, r.size + 1))))
        elif method == 1:
            # Same as np.sum(r / np.log2(np.arange(2, r.size + 2)))
            return float(np.sum(r / denominator_table[:r.shape[0]]))
        else:
            raise ValueError('method must be 0 or 1.')
    return 0.


def get_ndcg(r, k, method=1):
    """Score is normalized discounted cumulative gain (NDCG).

    Note: this variant rescales the DCG between the worst ordering of r
    (ascending) and the best ordering (descending), instead of only
    dividing by the best DCG.

    >>> r = [3, 2, 3, 0, 0, 1, 2, 2, 3, 0]
    >>> get_ndcg(r, 1)
    1.0
    >>> r = [2, 1, 2, 0]
    >>> round(get_ndcg(r, 4, method=0), 4)
    0.7304
    >>> round(get_ndcg(r, 4, method=1), 4)
    0.8969
    >>> get_ndcg([0], 1)
    0.0
    >>> get_ndcg([1], 2)
    1.0

    Args:
        r: Relevance scores (list or numpy) in rank order
            (first element is the first item)
        k: Number of results to consider
        method: If 0 then weights are [1.0, 1.0, 0.6309, 0.5, 0.4307, ...]
                If 1 then weights are [1.0, 0.6309, 0.5, 0.4307, ...]
    Returns:
        Normalized discounted cumulative gain
    """
    dcg_max = dcg_at_k(sorted(r, reverse=True), k, method)
    dcg_min = dcg_at_k(sorted(r), k, method)
    if not dcg_max:
        return 0.
    if dcg_max == dcg_min:
        # Guard against division by zero. Assumption: when every ordering
        # yields the same DCG, the list is treated as ideal.
        return 1.
    dcg = dcg_at_k(r, k, method)
    return (dcg - dcg_min) / (dcg_max - dcg_min)


# NDCG with explicitly given best and worst possible relevances,
# for recommendations including unrated movies
def get_ndcg_2(r, best_r, worst_r, k, method=1):
    dcg_max = dcg_at_k(sorted(best_r, reverse=True), k, method)
    if worst_r is None:
        dcg_min = 0.
    else:
        dcg_min = dcg_at_k(sorted(worst_r), k, method)
    if not dcg_max:
        return 0.
    if dcg_max == dcg_min:
        # Same division-by-zero guard (and assumption) as in get_ndcg
        return 1.
    dcg = dcg_at_k(r, k, method)
    return (dcg - dcg_min) / (dcg_max - dcg_min)
```